Own Your Data With Data Streaming

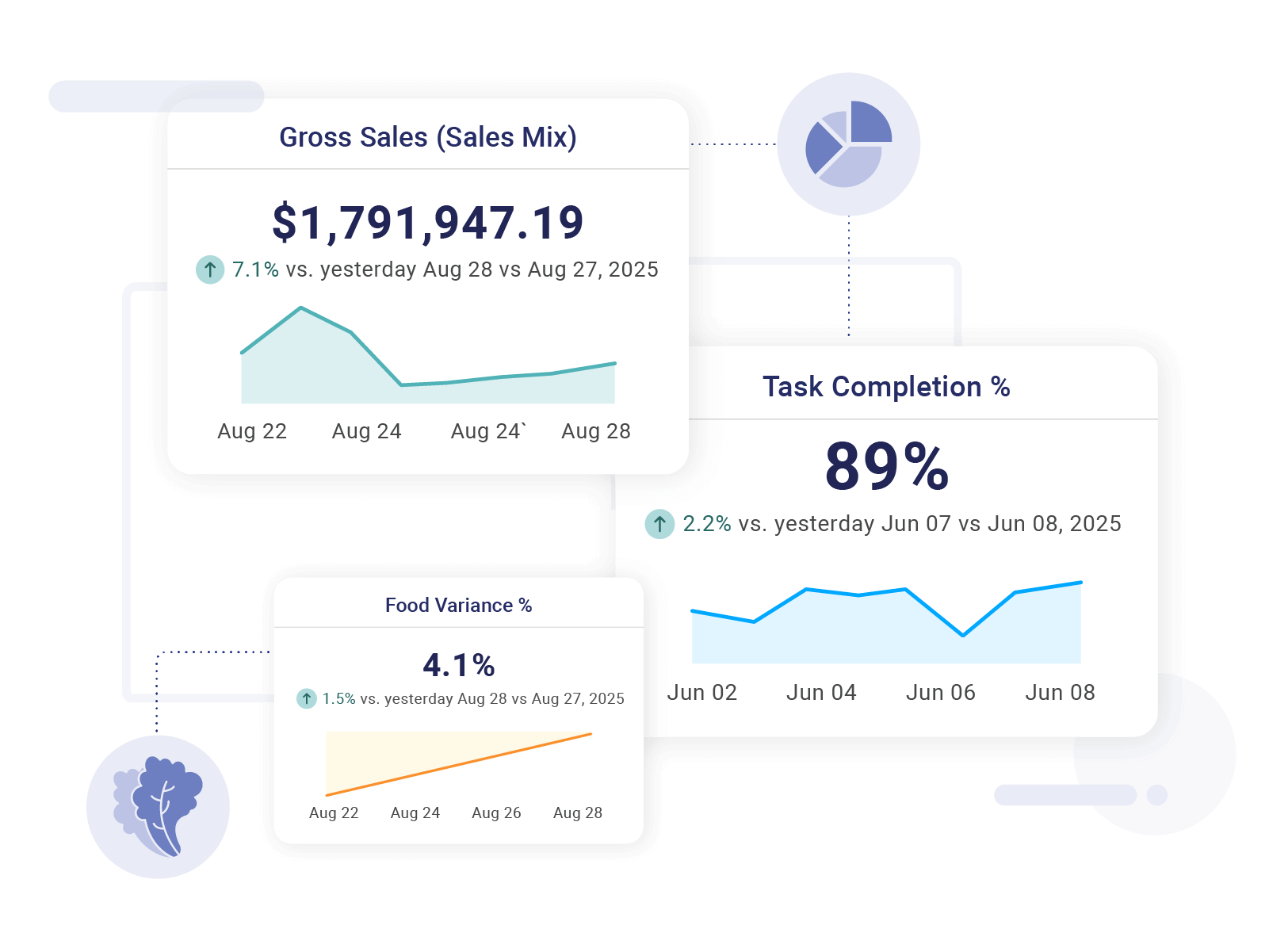

Gain complete control of your Crunchtime data to power your own dashboards and analysis.

Your data, to use however you want

We made Data Streaming so you can analyze your data the way you want.

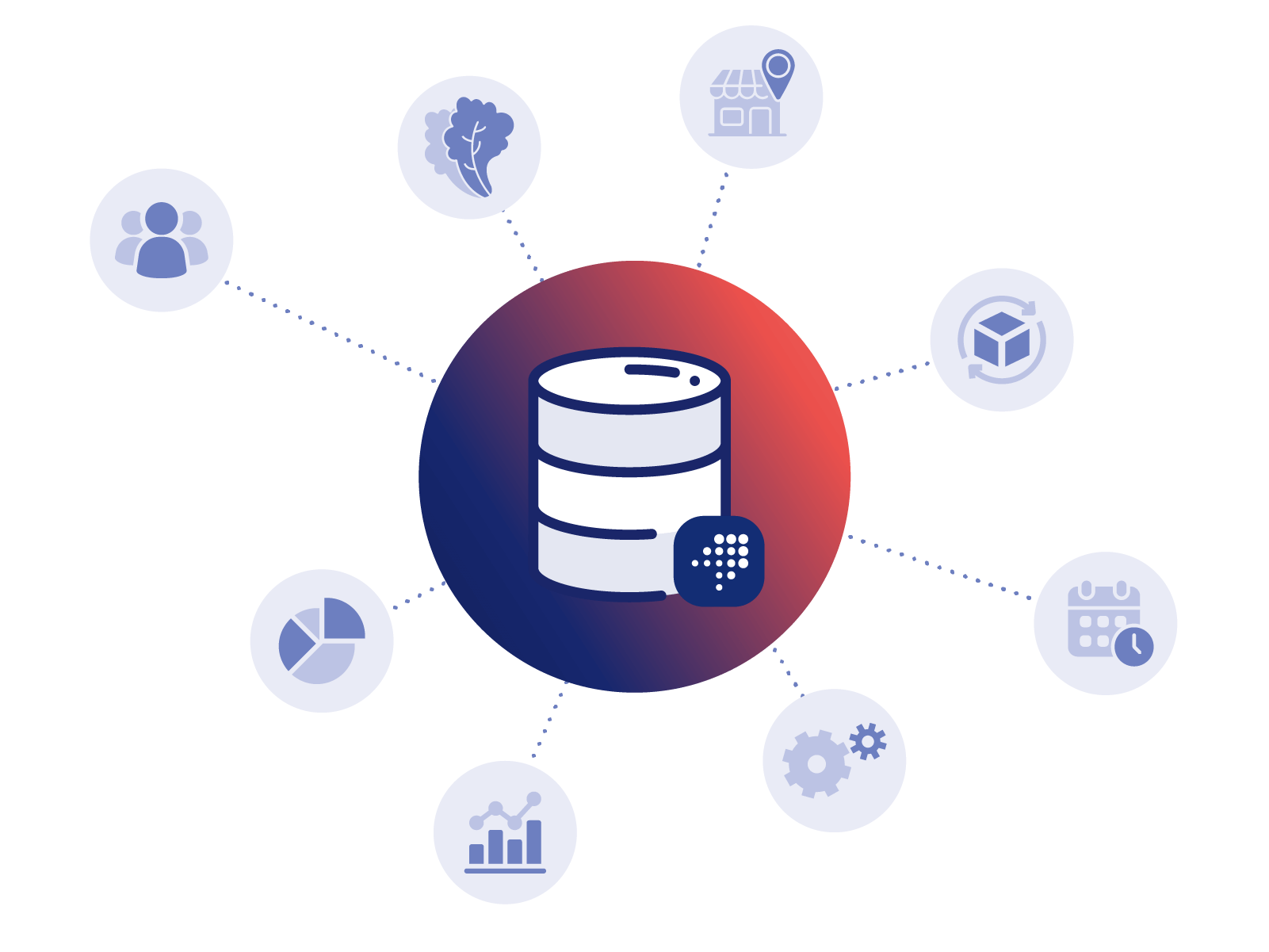

Data is captured in Crunchtime Apps (inventory counts, employee hours worked, etc.)

Data moves to Crunchtime Database

Crunchtime streams data to your Snowflake environment

Built for data experts

Data Streaming is designed with data professionals in mind. It gives you full control over your data, making it easy to extract, transform, and analyze large volumes of data exactly the way you need.

No. We chose Snowflake because it is an industry leader in data lakes and reporting, and because it supports egress to virtually every popular database.

Crunchtime will manage the streaming data replication to a Snowflake data warehouse operated by you. This includes:

- Monitoring the stream and availability of data delivery to the Snowflake data warehouse.

- Synchronizing eligible tables and columns to the Snowflake data warehouse, coordinated with schema changes in the products as new features are created.

Up to 4 hours for inventory and labor, and up to 12 hours for Zenput. This means a change made in the system will become visible in the Snowflake data warehouse within 4 hours (inventory and labor) or 12 hours (Zenput) of being made.

Changes are moved to the Snowflake environment as they are found. This is not a “batch” process.
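
For data teams who want to monitor freshness on their side, the 4-hour and 12-hour windows above can be turned into a simple check. The following is a minimal sketch in Python, not part of the Crunchtime product: the names `MAX_DELAY`, `latest_visible_at`, and `is_overdue` are hypothetical, and the source labels are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone

# Maximum replication delay per data source, using the figures stated above.
# The dictionary keys are illustrative labels, not official product identifiers.
MAX_DELAY = {
    "inventory": timedelta(hours=4),
    "labor": timedelta(hours=4),
    "zenput": timedelta(hours=12),
}


def latest_visible_at(changed_at: datetime, source: str) -> datetime:
    """Return the latest time a change should be visible in Snowflake."""
    return changed_at + MAX_DELAY[source]


def is_overdue(changed_at: datetime, source: str, now: datetime) -> bool:
    """Return True if the change should already be visible at time `now`."""
    return now > latest_visible_at(changed_at, source)


if __name__ == "__main__":
    changed = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
    print(latest_visible_at(changed, "inventory"))
    print(latest_visible_at(changed, "zenput"))
```

Because changes are streamed as they are found rather than in batches, most changes would be expected to arrive well before these upper bounds; the check only flags rows that exceed the stated maximum.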